Looking for the latest Azure AI news for September 2025? Then you are in the right place. At Dynamics Edge, you can learn how to become a Microsoft Azure Solutions Partner in 2025 and much more about the modernized OpenAI offerings and other services in the Azure ecosystem.

The preview integration with Azure Key Vault lets Foundry projects pull secrets, keys, and credentials securely instead of hardcoding them. Learn more in the Azure AI Foundry Training December 2025 AI-3016 course. Previously, developers had to manage secrets manually or place them in environment variables, which raised the risk of leakage. With Key Vault connections now available in Foundry, applications and agents can request sensitive values at runtime, in line with enterprise security standards. This reflects Microsoft’s priority on governance: as AI apps move from prototypes into regulated industries, keeping secrets locked down while still making them available to the applications and agents that need them becomes non-negotiable.

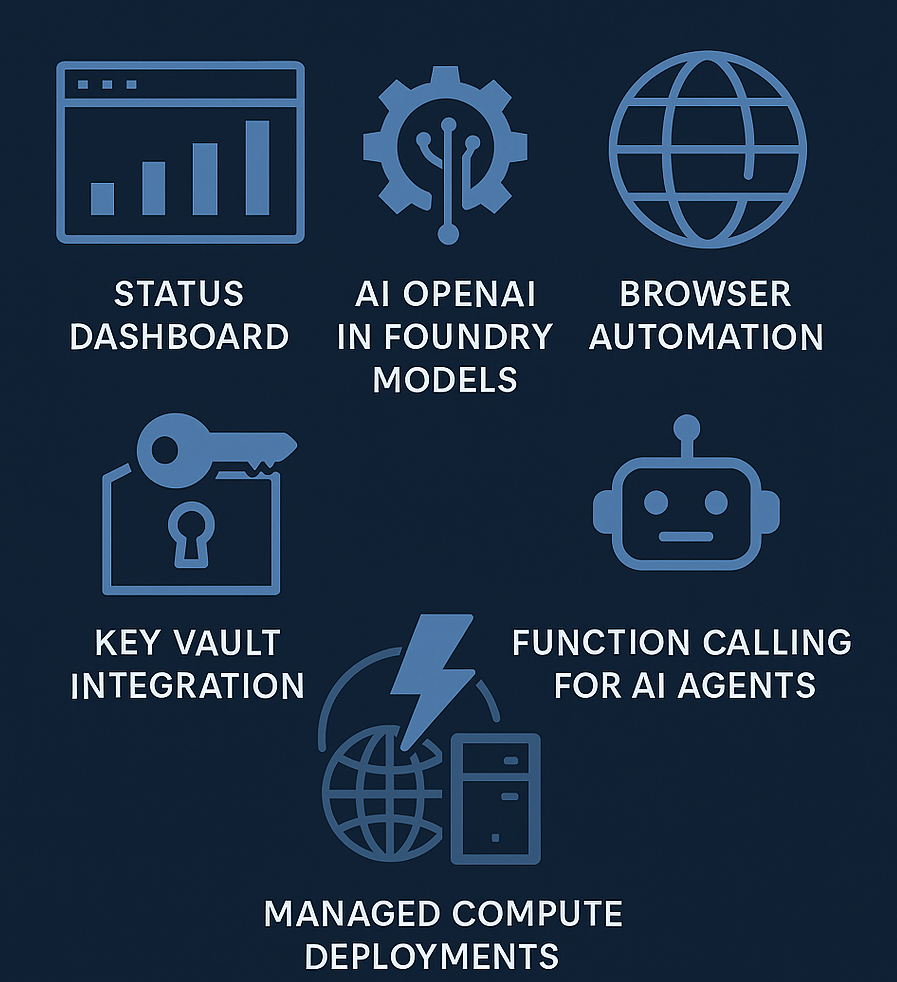

Azure AI Foundry Status Dashboard (Preview)

A new Azure AI Foundry Status Dashboard provides a central, real-time view of system health across all Foundry services. Instead of relying on general Azure status pages or sifting through logs, teams now get a Foundry-specific dashboard with live status indicators, incident reports (with timelines and root-cause analysis), and historical uptime for core services. This preview feature allows developers and enterprises to quickly distinguish local configuration issues from broader platform incidents. The dashboard also supports subscriptions (email, SMS, webhook) for alerts on outages or maintenance, enabling faster reaction to disruptions and reducing downtime. By surfacing metrics in a human-readable way, it bridges the gap between traditional ops monitoring and developers’ workflow, which is especially useful for organizations running multiple AI models and agents in production.
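
The webhook subscriptions are usually where automation starts. As a minimal sketch, the handler below decides whether a status event should page someone, notify the team, or be ignored. The payload fields (`status`, `region`) and the status values are hypothetical placeholders, not the documented Foundry webhook schema, so map them to the real payload before relying on this.

```python
import json

# Hypothetical status values; the real Foundry webhook schema may use different ones.
SEVERE_STATUSES = {"major_outage", "partial_outage"}


def triage_status_event(raw_body: str, our_regions: set[str]) -> str:
    """Classify a status webhook body as "page", "notify", or "ignore".

    page:   a severe incident in a region we deploy to (or a global one)
    notify: any other non-operational status that affects us
    ignore: operational events, or incidents in regions we don't use
    """
    event = json.loads(raw_body)
    status = event.get("status", "unknown")
    region = event.get("region", "global")  # assume a missing region means global

    affects_us = region == "global" or region in our_regions
    if not affects_us or status == "operational":
        return "ignore"
    if status in SEVERE_STATUSES:
        return "page"
    return "notify"


if __name__ == "__main__":
    body = '{"service": "agents", "status": "major_outage", "region": "eastus2"}'
    print(triage_status_event(body, {"eastus2", "swedencentral"}))  # page
```

Routing only region-relevant, severe events to a pager keeps the dashboard's alerts useful instead of noisy.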

Azure OpenAI Models v1 REST API Reference Integration

Azure AI Foundry has integrated the Azure OpenAI Models v1 REST API reference directly into its documentation and portal experience. In practical terms, this means developers no longer need to switch between external Azure OpenAI documentation and Foundry’s docs when building or deploying models. The full schema and reference for Azure OpenAI’s REST endpoints (now versioned as v1) are available alongside other Foundry docs. This unification of documentation reduces friction in development and ensures that API calls and parameters are aligned with the latest Foundry capabilities. In short, Foundry is positioning itself as the one-stop platform – not just a “lab” – for hosting, fine-tuning, and managing Azure OpenAI models. Developers benefit from a single reference point that stays up-to-date with Foundry’s evolving API lifecycle (GA and preview endpoints), leading to more consistent, less error-prone integration of OpenAI models into their applications.
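
To make the v1 endpoint shape concrete, here is a sketch that builds (without sending) a chat completions request using only the Python standard library. It assumes the v1 base path `https://<resource>.openai.azure.com/openai/v1/`, key-based auth through the `api-key` header, and that the deployment name goes in the `model` field; confirm each of these against the v1 reference. `build_v1_chat_request` is a hypothetical helper.

```python
import json
import urllib.request


def build_v1_chat_request(resource: str, api_key: str, deployment: str,
                          prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat completions call on the v1 endpoint."""
    url = f"https://{resource}.openai.azure.com/openai/v1/chat/completions"
    body = {
        "model": deployment,  # on Azure this is assumed to be the deployment name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    req = build_v1_chat_request("my-resource", "<your-key>", "gpt-5-mini", "Hello")
    print(req.get_method(), req.full_url)
    # Sending it would be: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to check the URL and body against the reference before any network call.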

Browser Automation Tool (Public Preview)

The Browser Automation tool has entered public preview, allowing AI agents in Foundry to interact with web pages programmatically through a real browser environment. This goes beyond simple web scraping – under the hood it uses a Microsoft Playwright-powered headless browser to mimic real user actions, such as navigating pages, clicking buttons, filling forms, and even performing complex multi-step workflows. For enterprise scenarios, this opens up new possibilities: an AI agent can now interface with legacy web applications that have no APIs, or automate tasks in third-party web services, by essentially “driving” the UI like a human would. The browser automation runs within the user’s Azure subscription via a managed Playwright workspace, so teams don’t have to provision VMs or maintain custom browser infrastructure. While still experimental (preview), this feature signals Foundry’s evolution into an agentic development environment – one where AI agents can not only generate content but also take actions in the world (e.g. booking appointments on a website or extracting data from a partner portal). Microsoft emphasizes that it’s early-stage, but its availability highlights a broader trend: AI workflows can now extend into interactive web automation, bridging AI with the vast ecosystem of web-based systems.
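
The Browser Automation tool manages the browser for you, but it helps to see what "driving the UI" means at the Playwright level. This sketch replays a hypothetical list of steps (`goto`, `fill`, `click`) against a Playwright sync `Page`. The `Step` type, the step vocabulary, and the example login form are illustrative assumptions, not the tool's actual interface.

```python
from dataclasses import dataclass


@dataclass
class Step:
    action: str      # "goto", "click", or "fill" (illustrative vocabulary)
    target: str      # URL for "goto", CSS selector otherwise
    value: str = ""  # text to type for "fill"


def run_steps(page, steps: list[Step]) -> int:
    """Replay steps on a Playwright sync Page (or any object with the same methods)."""
    for step in steps:
        if step.action == "goto":
            page.goto(step.target)
        elif step.action == "click":
            page.click(step.target)
        elif step.action == "fill":
            page.fill(step.target, step.value)
        else:
            raise ValueError(f"unsupported action: {step.action}")
    return len(steps)


def run_live(steps: list[Step], headless: bool = True) -> None:
    """Run steps in real Chromium; needs `pip install playwright` and `playwright install chromium`."""
    from playwright.sync_api import sync_playwright

    with sync_playwright() as p:
        browser = p.chromium.launch(headless=headless)
        try:
            run_steps(browser.new_page(), steps)
        finally:
            browser.close()


# Example plan for a hypothetical login form:
PLAN = [
    Step("goto", "https://example.com/login"),
    Step("fill", "#username", "demo-user"),
    Step("click", "button[type=submit]"),
]
```

Rejecting unknown actions rather than skipping them keeps a model-generated plan from silently doing less than it claims.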

Azure Key Vault Integration (Preview)

Azure AI Foundry now offers a preview integration with Azure Key Vault to manage secrets and credentials securely. With this feature, teams can connect a Key Vault to their Foundry resource so that API keys, connection strings, and other sensitive values are pulled dynamically at runtime instead of being hard-coded or stored in plain text. By default, Foundry already used a managed Key Vault for storing connection secrets; the new integration lets organizations bring their own Key Vault and have Foundry use it as the centralized secrets store. All project-level and resource-level secret references can be managed through one vault connection. This significantly reduces the risk of secret leakage and ensures compliance with enterprise security policies. In practice, an AI agent or app in Foundry can retrieve credentials (for example, a database password or an API token) on the fly via secure references, without developers exposing those secrets in code or configuration. This enhancement reflects Microsoft’s focus on governance and “security by design” – as AI solutions move into production and regulated industries, robust secret management is essential. For organizations already invested in Azure security tooling, this makes Foundry fit more naturally into their compliance story by leveraging familiar Key Vault practices.
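
As an illustration of resolving secrets at runtime, the sketch below replaces hypothetical `keyvault:<name>` placeholders in an app config with values fetched through the `azure-keyvault-secrets` `SecretClient`. The placeholder convention and both helper names are assumptions for this example; Foundry's own connection references are managed through its portal and SDK, not this format.

```python
from typing import Callable

# Hypothetical placeholder convention used only in this example.
SECRET_PREFIX = "keyvault:"


def resolve_secret_refs(config: dict, get_secret: Callable[[str], str]) -> dict:
    """Return a copy of config with "keyvault:<name>" strings replaced by secret values."""
    resolved = {}
    for key, value in config.items():
        if isinstance(value, str) and value.startswith(SECRET_PREFIX):
            resolved[key] = get_secret(value[len(SECRET_PREFIX):])
        else:
            resolved[key] = value  # non-secret settings pass through unchanged
    return resolved


def keyvault_getter(vault_url: str) -> Callable[[str], str]:
    """Secret getter backed by Azure Key Vault (needs azure-identity, azure-keyvault-secrets)."""
    from azure.identity import DefaultAzureCredential
    from azure.keyvault.secrets import SecretClient

    client = SecretClient(vault_url=vault_url, credential=DefaultAzureCredential())
    return lambda name: client.get_secret(name).value


# Usage (the vault URL is a placeholder):
# settings = resolve_secret_refs(
#     {"db_password": "keyvault:db-password", "timeout_s": 30},
#     keyvault_getter("https://<your-vault>.vault.azure.net/"),
# )
```

Passing the getter in as a function keeps the resolution logic testable without a vault, while `DefaultAzureCredential` lets the same code use a managed identity in Azure and a developer login locally.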

GPT-5 Model Family Arrives in Foundry (August 2025 Update)

One of the biggest recent updates is the introduction of the GPT-5 model family into Azure AI Foundry. The August 2025 release added four GPT-5 variants: gpt-5 (the flagship model), gpt-5-mini, gpt-5-nano, and gpt-5-chat. The main gpt-5 model offers next-generation reasoning with a very long context window (about 272K input tokens), suited to complex, long-horizon tasks. The gpt-5-chat variant is a multimodal conversational model (handling text and other modalities) with a context of around 128K tokens, aimed at rich interactive dialogue. Mini and nano are lightweight versions focused on responsiveness: gpt-5-mini is optimized for fast tool calling and low-latency interactions, and gpt-5-nano targets ultra-low latency for quick Q&A or simple tasks. Access to the full gpt-5 model is gated: developers must register and request access. The smaller variants (mini, nano, chat) can be used without special registration.

Alongside the new models, Microsoft introduced “freeform tool calling” in GPT-5: the model can send raw payloads (such as Python code or SQL queries) directly to a tool without wrapping them in a strict JSON schema. This removes rigid format constraints and reduces integration overhead when GPT-5 works with external tools. Overall, the arrival of GPT-5 in Foundry (with its associated tools and requirements) marks a significant expansion of Foundry’s model catalog, positioning the platform for more advanced use cases that leverage GPT-5’s extended context and reasoning abilities.
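
Freeform tool calling is easiest to see in a request body. A sketch, assuming the Responses API shape OpenAI uses for GPT-5 custom tools (`"type": "custom"` tool definitions, `custom_tool_call` output items, and `custom_tool_call_output` replies); check the Azure OpenAI reference before relying on these names. The `run_sql` tool is hypothetical.

```python
def build_freeform_tool_request(deployment: str, task: str) -> dict:
    """Responses API body offering GPT-5 a freeform (custom) tool."""
    return {
        "model": deployment,
        "input": task,
        "tools": [
            {
                "type": "custom",  # freeform: the model sends raw text, not JSON arguments
                "name": "run_sql",  # hypothetical tool
                "description": "Runs a read-only SQL query and returns rows as CSV.",
            }
        ],
    }


def extract_custom_calls(response: dict) -> list[tuple[str, str, str]]:
    """Collect (call_id, tool name, raw input) from custom tool calls in the output."""
    return [
        (item["call_id"], item["name"], item["input"])
        for item in response.get("output", [])
        if item.get("type") == "custom_tool_call"
    ]


def tool_result_item(call_id: str, output: str) -> dict:
    """Input item that returns a tool's result to the model on the next request."""
    return {"type": "custom_tool_call_output", "call_id": call_id, "output": output}
```

The key difference from function calling is that `input` arrives as a plain string (here, a SQL statement), so the tool receives it without any JSON parsing step.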

Expanded Function Calling for Azure AI Agents

Azure AI Foundry’s function calling capabilities for AI Agents have been expanded, enabling more reliable orchestration of tasks across tools and APIs. Function calling allows developers to define structured functions that an AI agent can invoke as part of its reasoning process. In practice, you describe to the model what functions are available and their expected arguments; during a conversation or task, the agent can decide to call those functions and get back structured results. This update means agents no longer have to reply with free-form text that downstream code must parse to trigger actions – instead, the agent can directly call an external function (with the proper arguments) as a first-class step in its workflow. By having the model know when and how to hand off a query to a function (for example, a database lookup or a calculator API), developers eliminate guesswork and brittle parsing logic. Microsoft notes that this leads to more predictable and robust agent behavior, since outputs from tools come back in a consistent structured format rather than unstructured text. Scenarios like customer support bots, financial assistants, or IT automation benefit greatly: the agent can execute multi-step operations (querying systems, updating records, sending emails, etc.) with higher reliability, effectively acting like a junior developer that knows when to call an API. Function calling in Foundry is supported across the SDKs and REST API, and although the portal UI may not support defining functions yet (in preview), it’s fully available to code-first workflows. In short, this feature is another step toward production-ready AI “employees” – agents that can safely and seamlessly work across various systems using well-defined functions, without requiring complex glue code for each action. As one Microsoft workshop put it, “Function calling allows you to connect LLMs to external tools and systems,” empowering deep integrations between your applications and the AI.
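
The dispatch loop that function calling enables looks roughly like this. The sketch uses the Chat Completions `tools` / `tool_calls` message shape rather than the Foundry Agent Service SDK classes, and `lookup_order` is a hypothetical function standing in for a real database or API call.

```python
import json

# Tool definition in the Chat Completions "tools" format, sent with the request.
LOOKUP_ORDER_TOOL = {
    "type": "function",
    "function": {
        "name": "lookup_order",
        "description": "Fetch the status of an order by its ID.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}


def lookup_order(order_id: str) -> dict:
    # Hypothetical stand-in for a real database or API call.
    return {"order_id": order_id, "status": "shipped"}


REGISTRY = {"lookup_order": lookup_order}


def run_tool_calls(assistant_message: dict) -> list[dict]:
    """Execute each tool call in an assistant message and build "tool" role replies."""
    replies = []
    for call in assistant_message.get("tool_calls") or []:
        fn = REGISTRY[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        result = fn(**args)
        replies.append({
            "role": "tool",
            "tool_call_id": call["id"],  # ties the result back to the model's request
            "content": json.dumps(result),
        })
    return replies
```

The replies are appended to the conversation and sent back to the model, which then answers using the structured results instead of parsed free text.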

Updated Quotas & Limits for Azure OpenAI Models

Azure AI Foundry’s documentation for quotas and limits has been updated to reflect new model families (like GPT-5) and increased capacity for enterprise workloads. These updates clarify how much throughput (requests per minute, tokens per minute, etc.) each model deployment can handle, and they introduce specific tables for the GPT-5 series’ usage limits. For example, GPT-5’s higher resource profile comes with defined throughput limits to ensure fair usage across the multi-tenant platform. By publishing these limits transparently, Microsoft is signaling that Foundry is ready to support heavier production workloads: teams can plan scaling knowing the exact limits rather than hitting hidden ceilings unexpectedly. The updated quotas also include details on “capacity unit” ratios for the new model tiers and the Model Router (which now supports routing to GPT-5 models). In fact, the Model Router service was enhanced to handle GPT-5, allowing intelligent routing between model versions for cost or quality optimization, albeit with limited availability pending access requests. Enterprises pushing large GPT-5 or multimodal jobs (e.g. batch processing, streaming conversations) will especially benefit from the higher and clearer limits, since knowing the ceilings in advance helps them avoid unexpected throttling. Additionally, a new Capacity API was introduced to let developers programmatically check regional availability and capacity for models, aiding in deployment planning. Altogether, these quota and capacity changes help organizations confidently scale up their AI applications on Foundry by knowing the boundaries and how to request more capacity if needed, thus reducing downtime or performance bottlenecks in mission-critical deployments.
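
Even with published limits, production clients should expect HTTP 429 responses when a deployment's quota is exhausted. A common pattern, sketched here under the assumption that your HTTP wrapper raises a hypothetical `RateLimited` exception carrying the `Retry-After` value, is to honor the server's hint when present and otherwise back off exponentially with jitter.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical error your request wrapper raises on HTTP 429."""

    def __init__(self, retry_after: Optional[float] = None):
        super().__init__("rate limited")
        self.retry_after = retry_after  # seconds, parsed from the Retry-After header


def backoff_delay(attempt: int, retry_after: Optional[float], base: float = 1.0,
                  cap: float = 60.0, jitter: bool = True) -> float:
    """Seconds to wait before retrying after failed attempt number `attempt` (0-based)."""
    if retry_after is not None:
        return min(retry_after, cap)  # prefer the server's hint
    delay = min(cap, base * (2 ** attempt))  # 1, 2, 4, 8, ... capped
    return random.uniform(0, delay) if jitter else delay  # "full jitter"


def call_with_retry(fn: Callable[[], T], max_attempts: int = 5,
                    sleep: Callable[[float], None] = time.sleep) -> T:
    """Call fn, retrying on RateLimited up to max_attempts times in total."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimited as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the throttling error
            sleep(backoff_delay(attempt, err.retry_after))
    raise AssertionError("unreachable")
```

Jitter spreads retries from many clients over time so they do not hit the quota again in lockstep; the injectable `sleep` makes the loop testable without waiting.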

Documentation Updates: Models, Responses API, Capability Hosts & Reasoning

Accompanying the feature updates, Azure AI Foundry’s documentation has been overhauled in several areas – bringing previously scattered concepts into a more coherent framework. Key updates include:

- Responses API (General Availability) – The Azure OpenAI Responses API is now GA, and the docs have been updated to guide developers on building stateful, multi-turn conversations without resending the full conversation history on every call. The API can keep conversation state on the service side and coordinate multiple tool calls with model reasoning in one flow, supporting the latest Azure OpenAI models (including the GPT-4o, GPT-4.1, o-series, and GPT-5 models). The GA status and updated docs mean developers can rely on the Responses API for production scenarios, getting structured, predictable outputs instead of ad-hoc chat completions. This standardization simplifies building complex agents because the model’s reasoning and tool usage are returned in a consistent response schema.

- Capability Hosts – New documentation introduces capability hosts: sub-resources configured at both the Foundry account and project levels that tell the Agent Service where agent data is stored and processed, including conversation threads, uploaded files, and vector stores. In a standard agent setup, this is how organizations bring their own Azure Storage, Azure Cosmos DB, and Azure AI Search resources so that agent state stays in resources they control and secure. The guidance explains how to create capability hosts and point them at existing connections. By formalizing capability hosts, Foundry reduces ambiguity about where an agent’s data lives, which makes agent deployments easier to govern.

- Reasoning Models and Tools – Documentation for Azure OpenAI reasoning models (the o-series and GPT-5 models, which are geared toward multi-step reasoning and decision-making tasks) has been updated. This likely includes clarification on how these models should be used in tandem with tools and the Responses API. The overall theme is that Azure AI Foundry is moving beyond pure text generation; it is emphasizing planning and multi-step reasoning as first-class citizens. With the combination of structured tool use (function calling and agent tools) and guided reasoning (via the Responses API and specialized models), the platform is charting a clearer path for developers to build autonomous or semi-autonomous agents. These agents can carry out complex tasks (think: break down a goal into sub-tasks, invoke tools for each, and accumulate knowledge) in a predictable way, thanks to the now unified approach in the docs and APIs. For teams experimenting with such advanced agents, the improved documentation and conceptual consistency reduce the learning curve and integration effort.
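
The stateful behavior of the Responses API comes from chaining calls with `previous_response_id`, so each turn sends only the new input. The sketch below builds those request bodies and optionally sets `reasoning.effort` for reasoning models; the `ResponsesConversation` class is a hypothetical helper, not part of any SDK.

```python
from typing import Optional


class ResponsesConversation:
    """Builds Responses API request bodies, chaining turns via previous_response_id."""

    def __init__(self, deployment: str, reasoning_effort: Optional[str] = None):
        self.deployment = deployment
        self.reasoning_effort = reasoning_effort  # e.g. "low", "medium", "high"
        self.last_response_id: Optional[str] = None

    def next_request(self, user_text: str) -> dict:
        body = {"model": self.deployment, "input": user_text}
        if self.last_response_id:
            # The service restores earlier turns, so only the new input is sent.
            body["previous_response_id"] = self.last_response_id
        if self.reasoning_effort:
            body["reasoning"] = {"effort": self.reasoning_effort}
        return body

    def record(self, response_id: str) -> None:
        """Remember the ID of the response just received."""
        self.last_response_id = response_id
```

With the `openai` Python package pointed at the v1 endpoint (for example `OpenAI(base_url="https://<resource>.openai.azure.com/openai/v1/", api_key=...)`), a turn would plausibly be `resp = client.responses.create(**conv.next_request(text))` followed by `conv.record(resp.id)`; verify this against the SDK version you use.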

In summary, the doc updates around models, APIs, and capabilities ensure that all these powerful features (from GPT-5 to function tools) fit together logically. This helps developers compose solutions where an AI agent can reason, plan, and act using a structured, well-documented framework rather than a collection of disparate features.

Enhanced Enterprise Infrastructure: Managed Compute & Network for Hubs

Azure AI Foundry is also strengthening its enterprise infrastructure options by introducing managed compute deployments and managed network support for Foundry hubs. Instead of manually provisioning and scaling their own compute clusters for model hosting or agent execution, teams can now use Managed Compute Deployments, a service where Foundry deploys models onto managed infrastructure on demand. This gives organizations auto-scaling and maintenance-free operation for their AI deployments, similar to a PaaS model, while they keep control through Foundry’s interface.

Likewise, the Managed Network for Hubs feature lets an Azure AI Foundry hub (which organizes projects) run inside a Microsoft-managed virtual network that reaches other resources through private endpoints, without complex user setup. The updated documentation clarifies that enabling a managed network isolates the Foundry resources from public internet access except through approved outbound rules. This is crucial for companies in regulated industries or those with strict data residency requirements: all model inference and agent traffic can be kept within controlled network boundaries. The docs note that managed network mode is hub-scoped and, once enabled, cannot be turned off. Additionally, new roles such as Enterprise Network Connection Approver have been introduced to govern network changes. Altogether, these infrastructure updates allow enterprises to “lift and shift” AI workloads into Foundry with confidence: they can achieve isolation, security, and compliance comparable to their existing IT standards with far less effort, thanks to the managed nature of these features. This moves Foundry from a development playground toward a production-grade platform capable of handling sensitive, large-scale deployments (with auditing, cost control, and high availability in mind).
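
To make the isolation settings concrete, this sketch builds a `managedNetwork` settings block and rejects switching isolation back off, the one rule the docs state. The field names (`isolationMode`, `outboundRules`, `FQDN` rules) follow the ARM shape used for Azure Machine Learning-based hubs as best understood here and should be treated as assumptions; both helper functions are hypothetical.

```python
ISOLATION_MODES = ("Disabled", "AllowInternetOutbound", "AllowOnlyApprovedOutbound")


def managed_network_settings(mode: str, approved_fqdns: list[str]) -> dict:
    """Build a managedNetwork block (assumed ARM-style shape) for a hub."""
    if mode not in ISOLATION_MODES:
        raise ValueError(f"unknown isolation mode: {mode}")
    rules = {}
    # FQDN rules only matter when outbound traffic is limited to approved targets.
    if mode == "AllowOnlyApprovedOutbound":
        for i, fqdn in enumerate(approved_fqdns):
            rules[f"allow-fqdn-{i}"] = {
                "type": "FQDN",
                "category": "UserDefined",
                "destination": fqdn,
            }
    return {"managedNetwork": {"isolationMode": mode, "outboundRules": rules}}


def check_mode_change(current: str, requested: str) -> None:
    """Reject turning isolation off once a managed network has been enabled."""
    if current != "Disabled" and requested == "Disabled":
        raise ValueError("managed network isolation cannot be disabled once enabled")
```

Running a check like `check_mode_change` in a deployment pipeline surfaces the irreversibility before an apply fails, rather than after.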

Improved File Handling and Data Integration

Working with data has become easier in Azure AI Foundry thanks to new features for file handling and search integration. First, Foundry now allows direct file uploads via the File Search tool in the agent ecosystem. Developers can quickly add documents or knowledge base files to an agent’s context without building a separate ingestion pipeline: the files are uploaded and then searched or retrieved by the agent at runtime. Second, existing Azure AI Search indexes can be linked to Foundry’s tools. If an organization already has a populated Azure AI Search (formerly Azure Cognitive Search) index with its enterprise data, it can connect that index to an Azure AI Foundry agent as a knowledge source through configuration alone. The agent can then use the Azure AI Search tool to query that index in response to user questions, a technique often called grounding or Retrieval-Augmented Generation (RAG). Together, these improvements considerably simplify setting up an agent that has access to company-specific information. For example, instead of manually ingesting PDFs or SharePoint documents into the agent’s memory, a developer can either upload the files directly or plug in an index that’s kept up to date elsewhere. This not only saves time but also helps reduce hallucinations by grounding the agent’s answers in factual, current data from the connected sources. Microsoft’s direction here essentially turns Foundry into a “knowledge-ops” platform: it acknowledges that keeping AI models grounded in the right data is as important as the model itself. By natively supporting enterprise search indexes and file retrieval, Foundry enables a tighter loop between organizational knowledge stores and AI reasoning. Teams can build RAG workflows more rapidly with minimal plumbing, keeping their AI assistants accurate and relevant to the business’s current information.
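
The grounding idea behind RAG can be shown without any Azure service. In this sketch a naive term-overlap ranker stands in for Azure AI Search (a deliberate simplification, not how the Search tool ranks results), and the retrieved passages are packed into a prompt that asks the model to answer only from cited sources. The document IDs and helper names are hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real search analyzer."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """IDs of the top-k docs by shared-term count (ties broken by ID), excluding zero scores."""
    q = tokenize(query)
    scored = [(len(q & tokenize(body)), doc_id) for doc_id, body in docs.items()]
    scored.sort(key=lambda s: (-s[0], s[1]))
    return [doc_id for score, doc_id in scored[:k] if score > 0]


def grounded_prompt(query: str, docs: dict[str, str], k: int = 2) -> str:
    """Build a prompt that tells the model to answer only from cited sources."""
    hits = retrieve(query, docs, k)
    sources = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in hits)
    return (
        "Answer using only the sources below and cite them by ID. "
        "If they do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {query}"
    )
```

Swapping `retrieve` for a call to a real search index leaves `grounded_prompt` unchanged, which is the separation that makes the "plug in an existing index" approach work.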

Preview Tools Expansion: Code Interpreter, MCP, and Bing Search Grounding

Several cutting-edge preview tools have been added or expanded in Azure AI Foundry, showcasing the next generation of agent capabilities:

- Code Interpreter (Python) – Foundry now offers a Code Interpreter tool (in preview) similar to OpenAI’s popular ChatGPT Code Interpreter. This allows agents to execute Python code in a sandboxed environment during a conversation. Essentially, an AI agent can write and run code on the fly to solve a problem (e.g. data analysis, format conversion, calculations) and return the results. In an enterprise context, this is powerful for tasks like analyzing CSV data provided by a user or generating charts – all under the AI’s control but within a governed sandbox. The enterprise-grade implementation in Foundry ensures scalability and logging, so organizations can trust and monitor what code the AI is executing.

- Model Context Protocol (MCP) – The MCP tool (preview) lets an agent connect to remote MCP servers. MCP is an open protocol that standardizes how applications expose tools and contextual data to language models, so an agent can discover the tools a server offers and call them during a run instead of relying only on the prompt. With the MCP tool, developers can point an agent at an external context provider (for example, a knowledge base or a live data feed exposed as an MCP server) that the agent can query as needed. The preview samples for MCP have been refreshed, indicating its growing importance. This capability makes integration more flexible: rather than knowing only what is in the user prompt or its short-term memory, the agent can fetch additional context in structured ways, enabling more complex and situationally aware behavior.

- Bing Search Grounding – Instead of a generic web search tool, Foundry now encourages using Bing Search grounding (with an official Bing Search tool integration) for agents that need internet information. This preview feature means an agent can perform a live Bing web search and use the results to answer user queries. Crucially, the search results can be cited or used to ground the agent’s answer in real data, which helps reduce hallucinations and increases answer traceability. By having the agent provide sourced information from Bing, enterprises can get more trustworthy outputs for scenarios like question answering or research assistance. (The older generic “web search” tool is not supported in the current Responses API setup; Bing Search with grounding is the recommended approach.)
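
Under the hood, MCP messages are JSON-RPC 2.0. The Foundry MCP tool handles the transport and handshake for you, but this sketch shows the `tools/list` and `tools/call` requests an MCP client sends and how text content is read from a tool result. It omits the `initialize` handshake and any transport, and the `get_weather` tool is hypothetical.

```python
import itertools
import json
from typing import Optional


class McpMessages:
    """Builds MCP JSON-RPC 2.0 requests with increasing IDs (no transport included)."""

    def __init__(self):
        self._ids = itertools.count(1)

    def request(self, method: str, params: Optional[dict] = None) -> str:
        msg = {"jsonrpc": "2.0", "id": next(self._ids), "method": method}
        if params is not None:
            msg["params"] = params
        return json.dumps(msg)

    def list_tools(self) -> str:
        return self.request("tools/list")

    def call_tool(self, name: str, arguments: dict) -> str:
        return self.request("tools/call", {"name": name, "arguments": arguments})


def tool_result_text(raw_response: str) -> str:
    """Join the text parts of a tools/call result; raise on protocol or tool errors."""
    resp = json.loads(raw_response)
    if "error" in resp:  # JSON-RPC level failure
        raise RuntimeError(resp["error"].get("message", "MCP error"))
    result = resp["result"]
    text = "".join(p["text"] for p in result.get("content", []) if p.get("type") == "text")
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(text or "tool reported an error")
    return text


if __name__ == "__main__":
    m = McpMessages()
    print(m.list_tools())
    print(m.call_tool("get_weather", {"city": "Oslo"}))
```

Distinguishing JSON-RPC errors from tool-level `isError` results lets an agent retry transport failures while passing a tool's own error message back to the model.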

These tools are in early preview, and while each is useful on its own, together they hint at Azure AI Foundry’s direction. Microsoft is expanding Foundry from just serving models to building a rich ecosystem for agentic applications – where an AI agent can reason (with long context and planning), use external tools (code execution, web browsing, search, custom APIs), and remain grounded in factual data. All of this is managed under a unified platform with enterprise-grade security and monitoring. As these preview tools mature, developers will be able to create more autonomous agents that combine reasoning, action, and knowledge retrieval seamlessly. Even now, early adopters in the preview can experiment with, say, an agent that searches the web and writes code to solve a task, then calls a custom function to update a database – all orchestrated within Foundry’s agent framework. It’s a glimpse of how AI-powered workflows might evolve, with Azure AI Foundry positioning itself as a leading platform for building and scaling these complex AI agents.
